Wayne Wu

Ting-Wei Wu

Gatech ML PhD

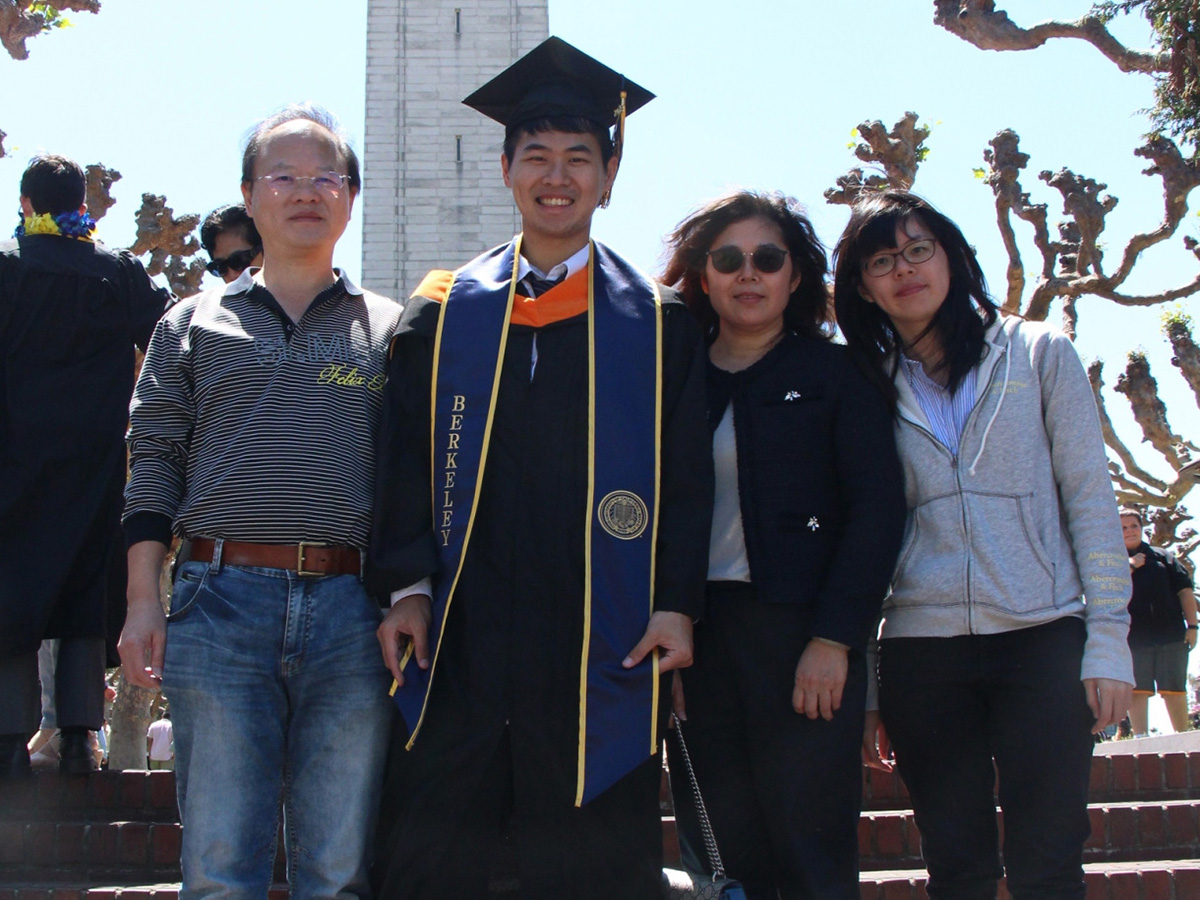

UC Berkeley BioE MEng

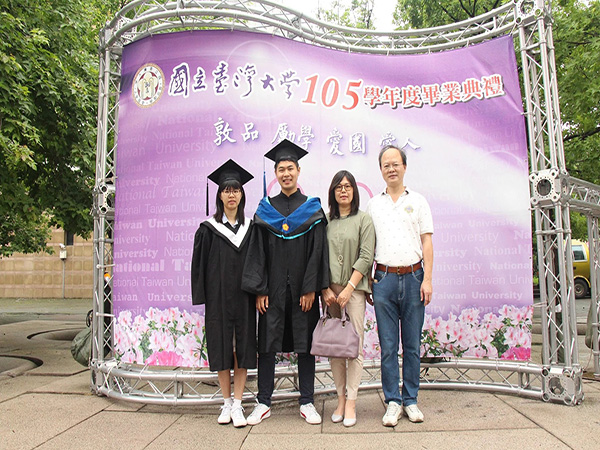

National Taiwan University EE MS/BS

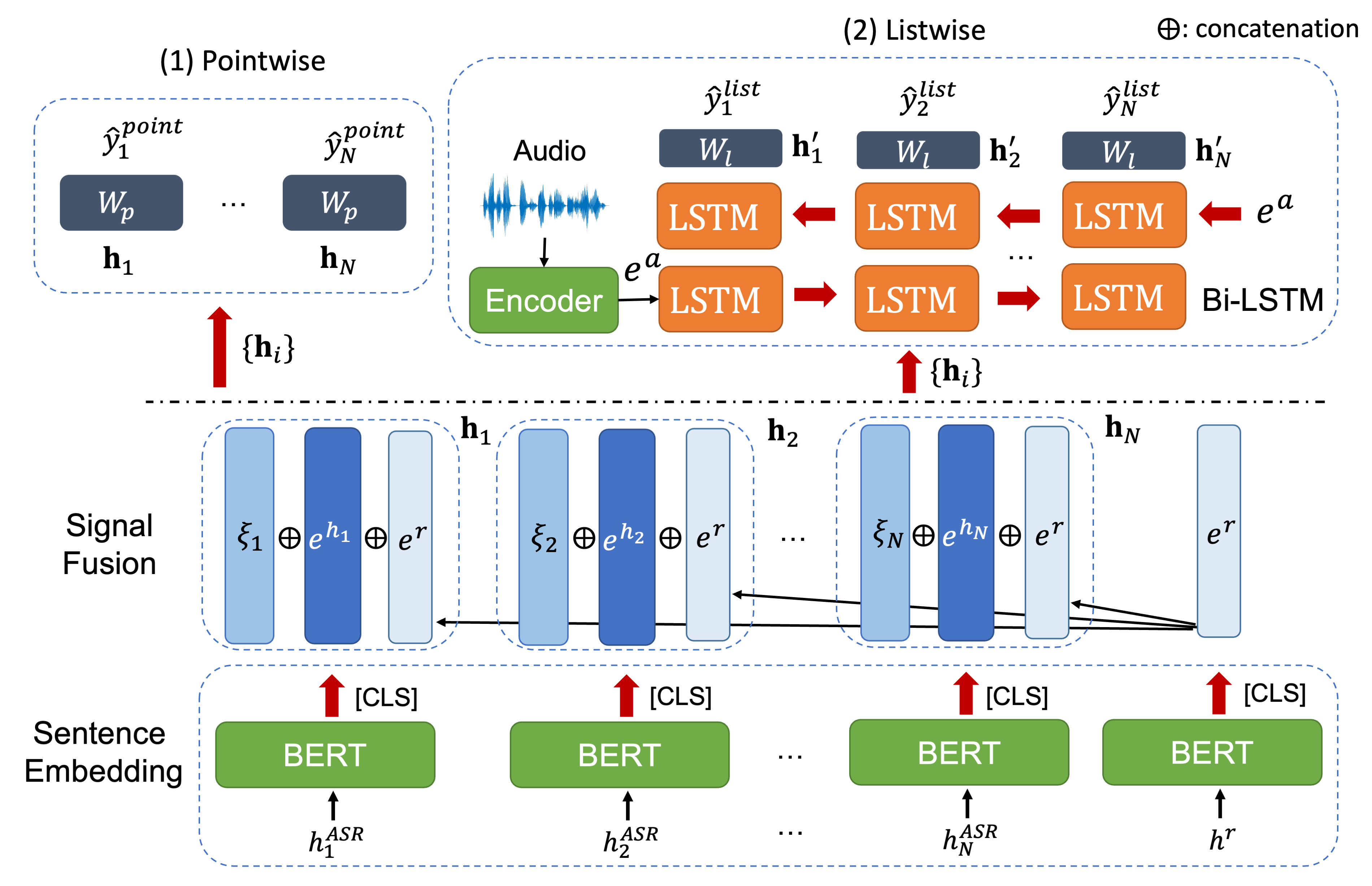

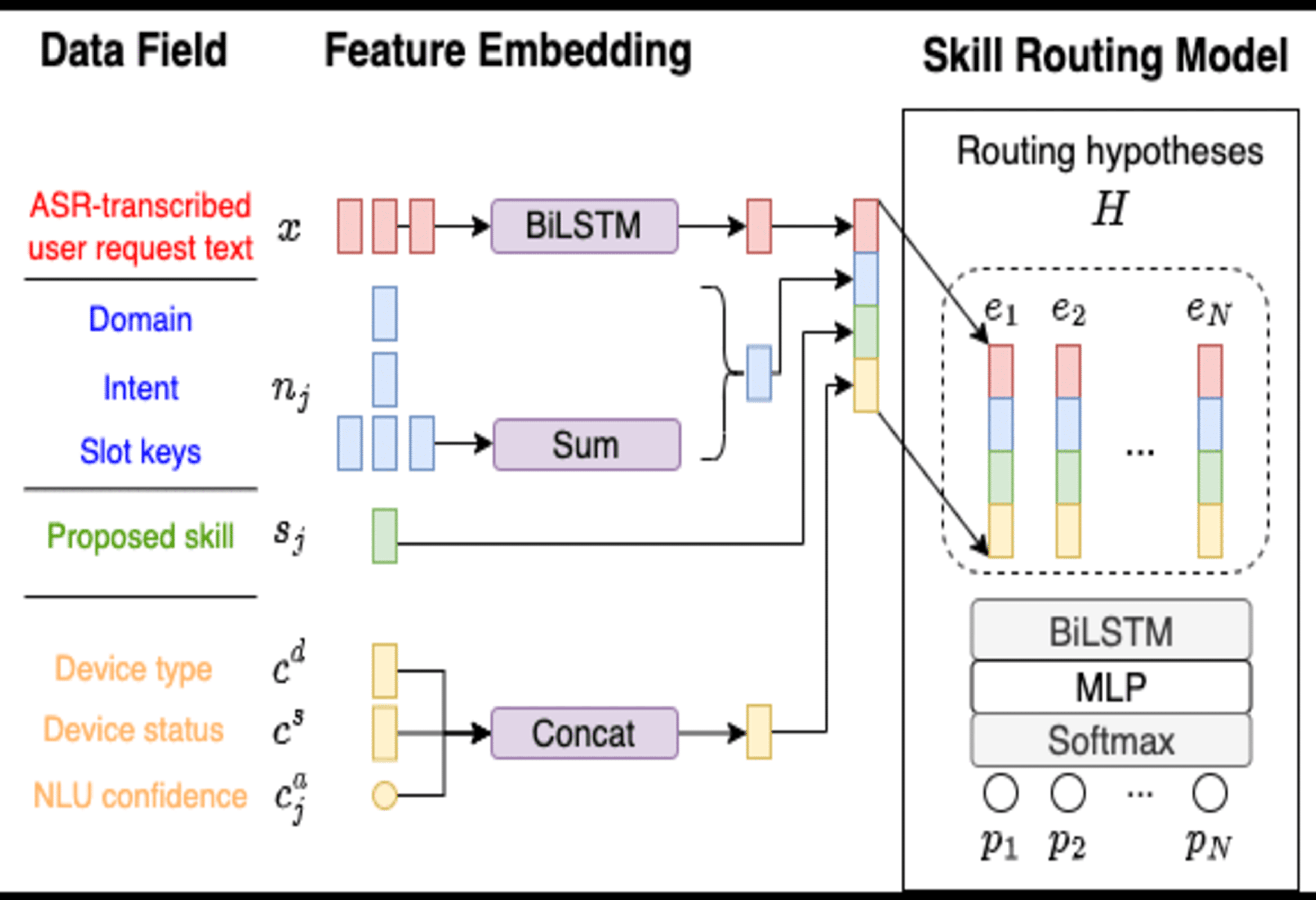

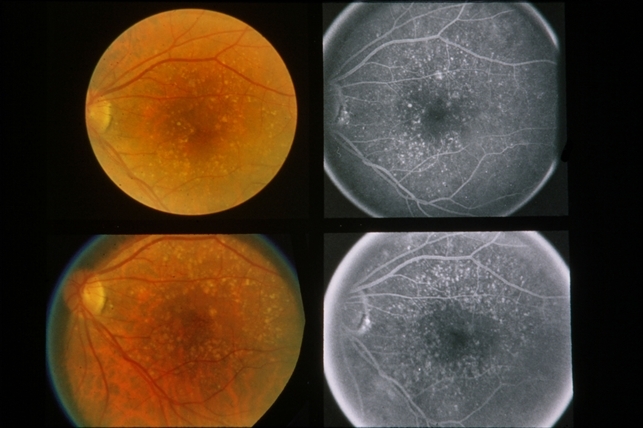

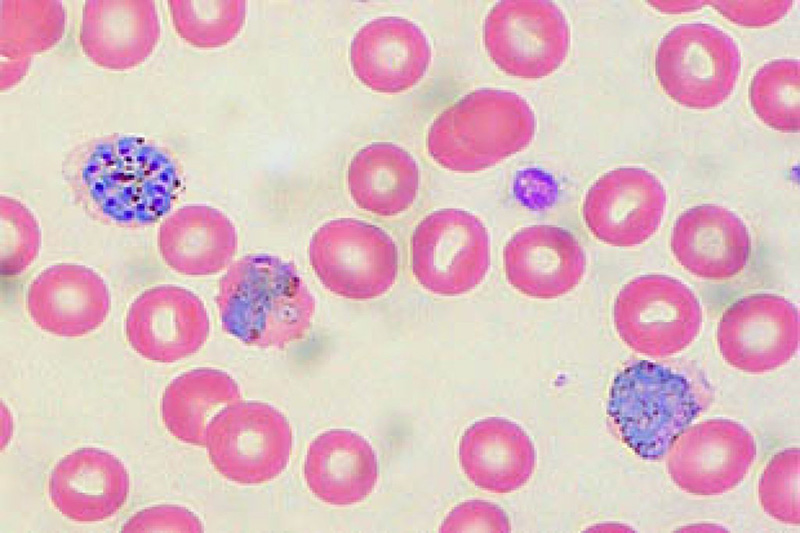

Conversational AI & Computer Vision

Latest News